The use of AI in addiction treatment is expanding fast, whether through predictive analytics, digital relapse prevention tools, or AI counseling that guides patients between therapy sessions. Researchers are also exploring AI-driven drug discovery to develop safer medications for dependence and withdrawal.

On the surface, this sounds like progress we desperately need. With the rising demand for alcohol addiction treatment, drug addiction treatment, and even flexible outpatient addiction treatment, technology promises to ease pressure on overburdened clinicians and make de-addiction treatment more accessible. For families, it hints at faster answers. For clinicians, it offers new ways to keep patients engaged. And for patients themselves, it creates the possibility of support that’s available anytime, anywhere.

But beneath the promise lie real concerns. The limitations of AI in mental health care aren’t small details; they touch on safety, trust, and dignity. A chatbot that misunderstands distress could delay lifesaving intervention. Predictive models built on biased data might overlook vulnerable groups. Sensitive health records, once digitized, can never be fully “locked away.”

What’s at stake here goes far beyond convenience. It’s about the well-being of people navigating some of the hardest moments of their lives. It’s about the confidence families place in the care system. It’s about the responsibility clinicians carry every day. And ultimately, it’s about how society chooses to use artificial intelligence and mental health tools: as a way to genuinely support recovery, or as a shortcut that risks doing more harm than good.

Ethical Boundaries and Regulatory Gaps in AI Counseling for Addiction Treatment

AI is opening new doors in addiction treatment services, but it also raises difficult questions. The same abilities that make it powerful also make it risky in alcohol addiction treatment, drug addiction treatment, and broader mental health care. To see the dangers clearly, we need to examine the ethical and regulatory challenges it creates.

Patient Privacy & Data Security in Addiction Treatment

Few things are more sensitive than information about substance use. Records from an addiction treatment center contain deeply personal details that patients often share with hesitation. When these records are processed by AI, the risk of data breaches, misuse, or even re-identification becomes a serious concern. Unlike in other areas of healthcare, exposure here doesn’t just mean a medical privacy violation; it can also expose patients to stigma and discrimination. Current regulations like HIPAA, along with patchwork global standards, struggle to keep pace with how quickly AI in addiction treatment is advancing.

Transparency and Explainability in AI-Powered De-Addiction Treatment

One of the most pressing challenges in AI mental health applications is the black box dilemma. Many AI models make recommendations without offering clear explanations of how decisions were reached. For patients undergoing de-addiction treatment, this lack of clarity complicates informed consent. If an algorithm suggests a certain therapy path or flags someone as high risk for relapse, both patients and clinicians deserve to understand why.

Accountability and Legal Liability in AI Counseling

When an AI system makes a harmful or misguided recommendation in the context of AI counseling or clinical care, the question becomes: who is responsible? Is it the developer who designed the algorithm, the clinician who used it, or the institution that deployed it? Currently, there are no clear legal or ethical frameworks to address these scenarios. If a patient in alcohol addiction treatment were to be harmed due to an AI-generated suggestion, liability would be difficult to establish.

The Human Cost: Clinical Risks in AI Counseling

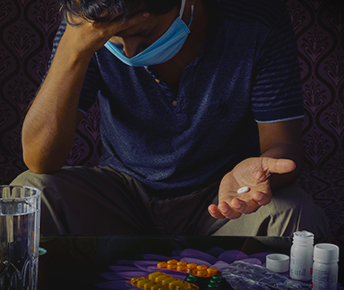

Beyond privacy and regulation, the most pressing dangers of AI in addiction treatment emerge in the clinic itself. When technology shapes decisions about recovery, the risks are no longer theoretical; they directly affect patient safety, trust, and outcomes.

Inaccuracy, Misdiagnosis, and Harmful Advice

AI models are trained on patterns in data, not on the lived experience of recovery. This means they can easily miss subtle warning signs or, worse, provide unsafe suggestions. Imagine a patient in alcohol addiction treatment reaching out to an AI chatbot during a moment of crisis. If the system fails to detect suicidal intent or misinterprets their words, the delay in proper intervention could have devastating consequences.

Overreliance on AI Counseling and Delayed Professional Help

Another danger lies in mistaking AI for a full substitute for professional care. Patients may turn to digital tools for reassurance instead of seeking timely help from an addiction treatment center. Families, too, might place undue faith in predictive apps or AI counseling platforms, assuming they offer the same safety net as a trained therapist. This overreliance can delay real therapeutic intervention, making conditions worse before human clinicians step in.

Lack of Personalization and Nuance

Addiction is deeply personal, shaped by biology, psychology, environment, and culture. Yet most AI tools still deliver one-size-fits-all responses. For someone in outpatient addiction treatment, a generic coping tip may feel disconnected from their reality. In de-addiction treatment, where progress often depends on tailoring strategies to each patient’s evolving needs, the rigidity of current AI systems risks leaving people feeling unseen and unsupported.

The Social Stakes of AI in Addiction Treatment

The societal and systemic risks of AI underscore that technology alone cannot solve the challenges of addiction care. To truly harness the potential of AI in addiction treatment while protecting patients, we need a thoughtful, patient-centered approach that combines innovation with ethical oversight, clinical expertise, and human judgment.

Reinforcement of Stigma

AI systems learn from existing data, and if that data reflects societal biases, those biases get amplified. In the context of addiction treatment services, this can mean perpetuating harmful stereotypes about who is at risk or who deserves care. Patients seeking alcohol addiction treatment or drug addiction treatment may find themselves unfairly labeled or categorized based on flawed AI predictions.

Commercial Exploitation and Marketing Ethics in AI Counseling & Addiction Centers

Another societal concern is how AI is increasingly used to drive marketing and lead generation in the addiction treatment space. Algorithms can identify vulnerable individuals struggling with substance use and target them with ads for addiction treatment centers or services, often prioritizing profit over patient well-being. While AI counseling and predictive tools promise support, they can blur the line between care and commercial gain, raising ethical questions about data use and patient benefit.

Conclusion

The next decade will determine whether AI in addiction treatment becomes a trusted ally or a cautionary tale. Emerging technologies like adaptive learning models, multimodal health data integration, and digital biomarkers could open new frontiers in alcohol addiction treatment, drug addiction treatment, and broader mental health care. But their success will depend on more than technical progress. It will hinge on how responsibly they are designed, governed, and applied.

The future of addiction treatment services lies not in replacing human care, but in creating systems where technology amplifies the reach and impact of skilled professionals. If AI is developed with transparency, equity, and patient dignity at its core, it could help make de-addiction treatment more accessible, personalized, and effective. If not, it risks deepening mistrust and widening the very gaps it aims to close.

What comes next will be defined not just by algorithms, but by the choices of clinicians, policymakers, and innovators. The question is not whether AI will be part of the future of recovery; it already is. The question is whether we will shape it with the wisdom, compassion, and foresight that addiction care demands.

At Samarpan Recovery, our commitment remains rooted in people first. Technology may assist, but it is the human connection, empathy, and clinical expertise that truly transform lives. If you or a loved one is seeking support, we’re here to walk the path of recovery with you.

Frequently Asked Questions

1. How is AI used in treatment?

AI in addiction treatment supports alcohol addiction treatment and drug addiction treatment through predictive analytics, chatbots, and relapse prevention tools.

2. How is AI used in rehabilitation?

In a modern addiction treatment center, AI aids de-addiction treatment by monitoring patient progress, personalizing recovery plans, and supporting outpatient addiction treatment.

3. What's the best AI to use for therapy?

There isn’t a single best tool. AI counseling apps and platforms can complement therapy, but they work best when integrated with professional addiction treatment services.

4. Can AI replace a physical therapist?

No. AI may assist with exercises or monitoring, but as in mental health care, it cannot replace the expertise and human connection of a physical therapist.
